Visual Long-Context Dataset: PaperScope

As pioneers in exploring the relationship between the MDL of image bases and model capacity, we are keenly aware of how scarce large-scale visual long-context datasets are, especially datasets suitable for training high-capacity vision models. Inspired by DeepSeek-OCR, we envision that vision models, once scaled up, can achieve autonomous reasoning. This would replicate the remarkable success of large models in the language domain while alleviating the computational bottlenecks commonly encountered in LLMs.

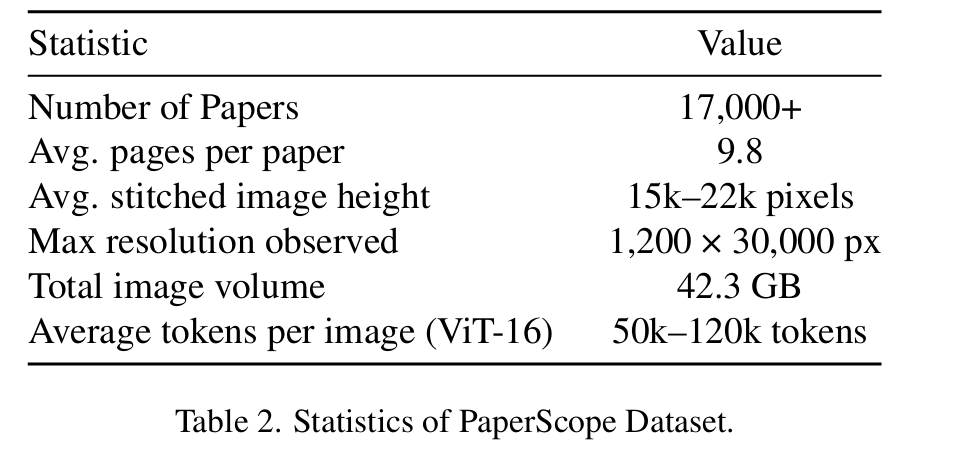

Validating whether large vision models can be trained effectively on visual long-context inputs requires a more realistic training dataset. To meet the demand for higher-quality visual long-context images, we developed PaperScope, a novel dataset of 17,365 high-resolution long-context images of papers collected from ICLR 2024 and 2025. It provides a valuable resource for studying visual redundancy and long-context understanding in vision models.

PaperScope contains more than 17,000 long-form paper images, typically 15k–22k pixels in height and reaching up to 30k pixels in extreme cases. Under standard ViT patch sizes, this yields tens of thousands of tokens per image, making the dataset a natural stress test for long-sequence Transformers. Unlike page-level document datasets, which split a paper into separate pages, PaperScope preserves the global continuity of academic layouts, including multi-column text, mathematical expressions, figures and plots, tables, and reference sections. This rich multimodal structure provides a realistic environment for examining how vision models process highly heterogeneous and semantically dense content.
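The token counts above follow from simple patch arithmetic. The sketch below is illustrative only: the function name is hypothetical, it assumes non-overlapping square patches with the image padded up to a multiple of the patch size (some pipelines crop or resize instead), and the 800-pixel width is an assumed value, since this card does not state image widths.

```python
import math


def vit_token_count(height_px: int, width_px: int, patch_size: int = 16) -> int:
    """Number of non-overlapping patch tokens for one image.

    Uses ceiling division, i.e. assumes partial patches at the bottom/right
    edges are padded into full patches rather than cropped away.
    """
    rows = math.ceil(height_px / patch_size)
    cols = math.ceil(width_px / patch_size)
    return rows * cols


# ASSUMPTION: hypothetical image width; real PaperScope widths may differ.
ASSUMED_WIDTH_PX = 800

for height in (15_000, 22_000, 30_000):
    tokens = vit_token_count(height, ASSUMED_WIDTH_PX, patch_size=16)
    print(f"height={height:>6} px -> {tokens:,} tokens (patch 16, width {ASSUMED_WIDTH_PX})")
```

For comparison, a standard 224×224 input with 16-pixel patches gives 196 tokens, so a single full-paper image is several hundred times longer. The count grows linearly with both height and width, so wider renderings push it higher still.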

A distinctive characteristic of PaperScope is the substantial redundancy inherent in academic formatting. Similar textual layouts, repeated structural motifs, and homogeneous backgrounds mean that large portions of the visual tokens contribute little new semantic information. This redundancy makes the dataset particularly well-suited for evaluating token merging, pruning, orthogonal basis learning, and other forms of semantic compression. These properties align naturally with our Orthogonal Filtering framework, enabling systematic investigation of how model capacity interacts with token reduction and how larger models achieve complete semantic reconstruction with fewer visible tokens.
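One crude way to quantify the background redundancy described above is to count patches whose pixels barely vary. The sketch below is a generic variance-based heuristic, **not** the Orthogonal Filtering framework. The function names and the `std_threshold` value are hypothetical, and images are represented as plain 2-D lists of grayscale values to keep the example dependency-free.

```python
from statistics import pstdev


def patchify(img, patch):
    """Split a 2-D grayscale image (list of rows) into flattened patch tokens.

    Trailing rows/columns that do not fill a whole patch are dropped.
    """
    h, w = len(img), len(img[0])
    tokens = []
    for r in range(0, h - patch + 1, patch):
        for c in range(0, w - patch + 1, patch):
            tokens.append([img[r + i][c + j] for i in range(patch) for j in range(patch)])
    return tokens


def low_information_fraction(tokens, std_threshold=2.0):
    """Fraction of patches whose pixel std is below a (hypothetical) threshold,
    e.g. blank page background."""
    flat = sum(1 for t in tokens if pstdev(t) < std_threshold)
    return flat / len(tokens)


def prune_by_variance(tokens, keep_ratio):
    """Indices of the top `keep_ratio` fraction of patches ranked by pixel std,
    returned in original (raster) order. A simple stand-in for learned pruning."""
    k = max(1, round(len(tokens) * keep_ratio))
    ranked = sorted(range(len(tokens)), key=lambda i: pstdev(tokens[i]), reverse=True)
    return sorted(ranked[:k])


# Toy 4x4 "page": one textured 2x2 patch, the rest white background.
page = [
    [0, 255, 255, 255],
    [255, 0, 255, 255],
    [255, 255, 255, 255],
    [255, 255, 255, 255],
]
toks = patchify(page, 2)
print(low_information_fraction(toks), prune_by_variance(toks, 0.25))
```

On the toy page, three of the four patches are flat background, and keeping 25% of tokens retains only the textured patch. Pixel variance is a deliberately naive proxy: it cannot detect repeated-but-textured motifs such as recurring headers, which is the kind of redundancy learned compression methods aim to exploit.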

Beyond reconstruction and token-efficiency tasks, PaperScope enables a wide range of long-context visual benchmarks. Tasks such as document-level semantic prediction, figure–caption association, section boundary identification, and layout-aware classification can all be performed at the full-paper level, requiring models to integrate information across extremely long spatial spans. The dataset also supports studying scaling laws that relate token visibility, model size, and semantic fidelity. Such studies provide empirical insight into how expanding model capacity reduces the need for dense visual tokens, an observation central to the empirical regularities established in our work.
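A token-visibility sweep needs a reproducible way to choose which tokens a model sees at each visibility ratio. The sketch below uses uniform random sampling, as in MAE-style masking. This sampling scheme, the function name, and the grid values are assumptions for illustration; they are not necessarily the protocol used in our experiments.

```python
import random


def sample_visible_tokens(num_tokens: int, visibility: float, seed: int = 0) -> list[int]:
    """Sorted indices of a uniformly random subset of tokens kept visible.

    `visibility` in (0, 1] is the fraction of tokens kept; the rest are masked.
    A fixed seed makes the same mask reproducible across model sizes.
    """
    if not 0 < visibility <= 1:
        raise ValueError("visibility must be in (0, 1]")
    k = max(1, round(num_tokens * visibility))
    rng = random.Random(seed)
    return sorted(rng.sample(range(num_tokens), k))


# HYPOTHETICAL sweep grid for a visibility / model-size study.
VISIBILITY_GRID = (1.0, 0.5, 0.25, 0.1)

for v in VISIBILITY_GRID:
    visible = sample_visible_tokens(num_tokens=1_000, visibility=v, seed=42)
    print(f"visibility={v:.2f} -> {len(visible)} visible tokens")
```

Fixing the seed per image means that, at a given visibility ratio, every model size sees exactly the same tokens. Differences in semantic fidelity can then be attributed to capacity rather than to mask luck.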

Finally, PaperScope is curated entirely from publicly accessible research papers and contains no private or sensitive information. Its construction adheres to conference usage policies, and its purpose is strictly academic: to advance research on efficient long-context visual modeling, image redundancy analysis, and scalable Transformer architectures. As far as we are aware, PaperScope represents one of the largest and most semantically coherent datasets of long-document images, offering an invaluable resource for exploring the limits of visual token compression and the training of next-generation large vision models.
